Since 2019, Australia’s Department of Industry, Science and Resources has been working to make the country a leader in “safe and responsible” artificial intelligence (AI). Central to this effort is a voluntary framework based on eight AI ethics principles, including “human-centred values”, “fairness” and “transparency and explainability”.

Every subsequent piece of national guidance on AI has built on these eight principles, urging business, government and academia to put them into practice. But these voluntary principles have no real hold on organizations that develop and deploy AI systems.

Last month, the Australian government began consulting on a proposal that struck a different tone. Acknowledging that “voluntary compliance […] is no longer enough”, it spoke of regulating AI in high-risk settings.

But the core idea of self-regulation remains firmly in place. For example, it is up to AI developers to determine whether their AI systems are high risk, by considering a set of risks that can only be described as endemic to AI systems.

If this high hurdle is cleared, what mandatory safeguards apply? In most cases, companies only need to demonstrate that they have internal processes in place that are consistent with the AI ethics principles. The proposal is most notable, then, for what it does not include: no oversight, no consequences, no refusal, no redress.

But there is another, ready-to-hand model that Australia could adopt for AI. It comes from another critically important technology: gene technology.

A different example

Gene technology is what lies behind genetically modified organisms. Like AI, it raises concerns for more than 60% of people.

In Australia, it is regulated by the Office of the Gene Technology Regulator. The regulator was established in 2001 in response to rapid developments in biotechnology in agriculture and health. Since then, it has become an exemplar of an expert-informed, highly transparent regulator focused on a specific technology with far-reaching consequences.

Three features have ensured the gene technology regulator’s success nationally and internationally.

First, it is a single-mission body. It regulates dealings with genetically modified organisms:

to protect the health and safety of people, and to protect the environment, by identifying risks posed by or as a result of gene technology.

Second, it has a sophisticated decision-making structure. As a result, the risk assessment of every application of gene technology in Australia is informed by sound expertise. The structure also insulates that assessment from political and corporate influence.

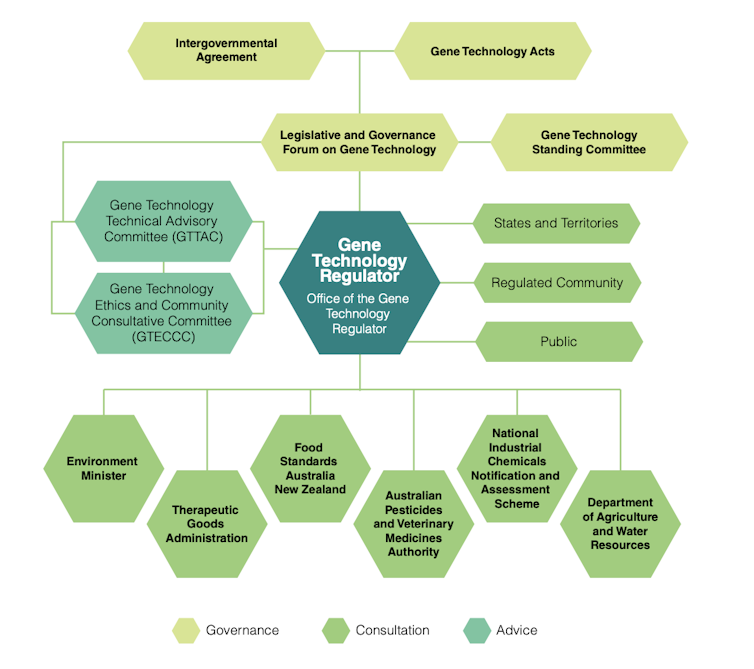

The regulator is informed by two integrated expert bodies: the Technical Advisory Committee and the Ethics and Community Consultative Committee. These bodies are complemented by Institutional Biosafety Committees, which support ongoing risk management at more than 200 research and commercial organizations accredited to use gene technology in Australia. This parallels best practice in food safety and drug safety.

Office of The Gene Technology Regulator, CC BY

Third, the regulator continuously incorporates public input into its risk assessment process, and it does so transparently. Every dealing with gene technology must be approved. Before any release into the wild, an exhaustive consultation process maximizes review and oversight. This ensures a high threshold of public safety.

Managing high-risk technologies

Together, these factors explain why Australia’s gene technology regulator has been so successful. They also highlight what is missing from most emerging approaches to AI regulation.

First, the mandate of AI regulation often involves an impossible compromise between protecting the public and supporting industry. Like gene technology regulation, it seeks to safeguard against risks. In the case of AI, these risks can be to health, the environment and human rights. But it also seeks to “expand the opportunities that AI offers to our economy and society”.

Second, the AI regulation currently being proposed outsources risk assessment and management to the providers of AI products. Instead, it should establish a national evidence base, informed by a range of scientific, social and cultural expertise.

The argument goes that AI is “out of the bag”, with potential uses too numerous and too mundane to regulate. Yet the methods of molecular biology are also well out of the bag. The gene technology regulator still oversees all uses of the technology, while continually working to classify certain dealings as “exempt” or “low-risk” to support research and development.

Third, the public has no meaningful opportunity to consent to dealings with AI. This is true whether it involves plundering the archives of our collective imagination to build AI systems, or deploying them in ways that undermine dignity, autonomy and justice.

The lesson of more than two decades of gene technology regulation is that regulating a promising new technology, until it has demonstrated a track record of non-damaging use for people and the environment, does not stop innovation. In fact, it saves it.
